In a groundbreaking development, two studies published in the journal Nature show that high-performance brain-computer interfaces (BCIs) can decode brain signals and help restore speech to people who had lost the ability to speak.

These advances offer hope to many people living with paralysis caused by conditions such as brainstem stroke and neurodegenerative diseases like amyotrophic lateral sclerosis (ALS).

Ann Johnson's Journey to Regain Speech

Ann Johnson's story illustrates what BCIs can make possible. More than 18 years ago, Ann, a mother, wife, and dedicated schoolteacher, suffered a brainstem stroke that left her severely paralyzed.

Although she regained limited control of her facial muscles, enough to make expressions, she still could not speak. In 2021, after learning about Pancho, a paralyzed man who had used BCI technology to turn his attempted speech into text, Ann contacted researchers at the University of California, San Francisco (UCSF), setting the stage for the breakthroughs that followed.

Decoding Attempted Speech

The UCSF study, published in Nature on August 23, reports substantial advances in BCI technology. Its coauthors include David Moses, PhD, of the UCSF Weill Institute for Neurosciences, and the research achieved several milestones.

The system decoded Ann's attempted speech at about 78 words per minute, roughly five times faster than earlier versions of the technology, drawing on a vocabulary of more than 1,000 words. Most striking, it translated brain signals directly into audible speech instead of only into text.

Translating Brain Signals Into Audible Speech

Previous studies converted brain signals into text; this one went further by synthesizing speech directly from Ann's brain activity. The team decoded the brain's representations of speech sounds and turned them into audible speech in a voice modeled on Ann's own.

To personalize that voice, the team used video footage of Ann's wedding, recorded before her stroke, so that the digital avatar speaking for her sounds more like her.

How BCIs Make Speech Possible

To give Ann her virtual voice, a thin rectangular array of 253 electrodes was placed on the surface of brain areas critical for speech production. The electrodes picked up the brain signals that would otherwise have controlled the muscles used for speech and facial expressions. A cable relayed these signals to computers, which form the basis of Ann's speech communication system.

Training AI Algorithms for Speech Decoding

Developing the system required extensive training of artificial intelligence algorithms to recognize Ann's unique brain signals for speech. During training, Ann repeatedly attempted to say phrases drawn from a 1,024-word conversational vocabulary until the computer could reliably identify the brain activity patterns associated with the basic sounds of speech.
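
Very loosely, this training step is a supervised classification problem: labeled examples of brain activity are used to learn a template for each speech sound, and new activity is assigned to the closest template. The sketch below illustrates that idea with a nearest-centroid classifier on made-up two-dimensional feature vectors. It is not the study's method (the researchers trained far more sophisticated AI models on much richer data); the function names, features, and phoneme labels are all hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical training example: a short feature vector (standing in for
# processed electrode activity) paired with the speech sound being attempted.
Example = tuple[list[float], str]


def train_centroids(examples: list[Example]) -> dict[str, list[float]]:
    """Average the feature vectors for each label (nearest-centroid training)."""
    sums: dict[str, list[float]] = {}
    counts: defaultdict[str, int] = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def classify(features: list[float], centroids: dict[str, list[float]]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Toy usage with invented data: two examples per hypothetical sound.
training = [
    ([0.9, 0.1], "AA"), ([1.1, 0.0], "AA"),
    ([0.0, 1.0], "M"), ([0.1, 1.2], "M"),
]
centroids = train_centroids(training)
print(classify([1.0, 0.2], centroids))  # AA
```

Repeating phrases many times, as Ann did, matters for the same reason it does here: each additional labeled example makes the learned template for a sound less sensitive to noise in any single attempt.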

Digital Avatars and Real-Time Speech

Ann also chose a digital avatar to represent her. When she silently attempted to speak, the AI model translated her brain activity into words that the animated avatar spoke aloud, complete with facial expressions for emotions such as happiness, sadness, or surprise.

Ann has to actually attempt to say the words, because the system reads signals from the speech motor cortex. Decoding those signals directly lets her communicate without relying on her damaged brainstem, which would normally relay them to the speech muscles.

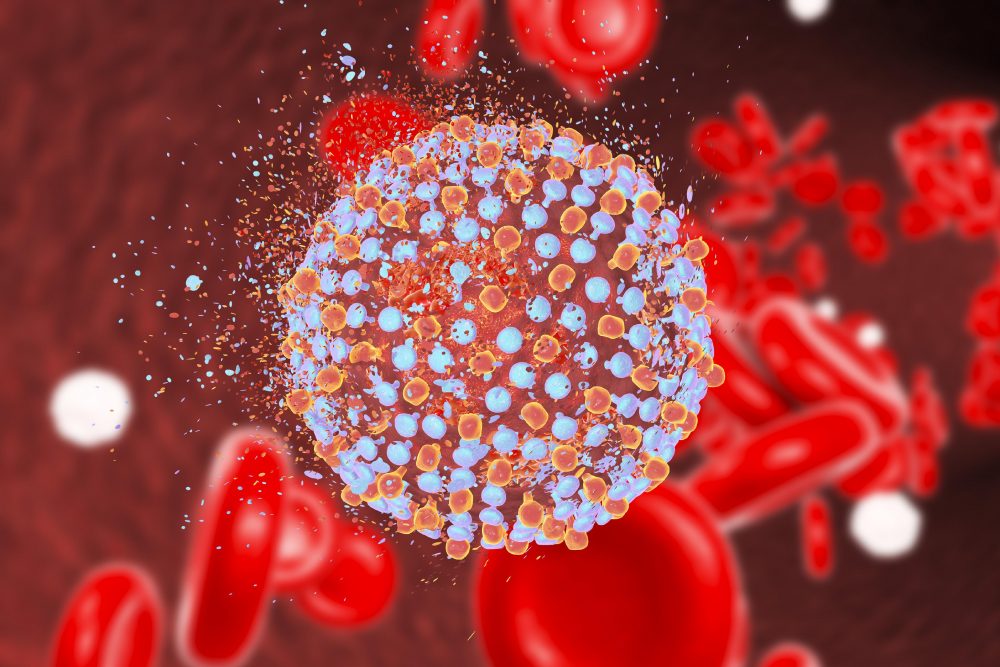

Implanted Device Detects Individual Neuron Activity

In a parallel study, also published in Nature, Stanford researchers implanted electrodes deeper into the brain to record the activity of individual neurons in Pat Bennett, who has ALS. In Pat's case, ALS began in the brainstem, a rare form of onset that impaired her control of the muscles needed for speech.

Decoding Speech in Real-Time

The implanted sensors, combined with decoding software, translated Pat's attempts at speech into words displayed on a screen. An AI algorithm learned to distinguish the brain activity patterns associated with each of the 39 phonemes that make up spoken English. This made real-time speech decoding possible, at speeds moving closer to the pace of natural conversation.
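
One simplified way to picture the step from phoneme predictions to words: the decoder emits a phoneme guess for each short time window, repeated guesses and silence windows are collapsed, and the resulting phoneme sequence is matched against a pronunciation dictionary. The sketch below assumes a tiny hypothetical lexicon written in ARPAbet-style symbols and a hypothetical `_` silence label. The Stanford team's software is far more sophisticated, and this greedy lookup is not its method.

```python
# Hypothetical pronunciation dictionary using ARPAbet-style phoneme symbols.
LEXICON: dict[tuple[str, ...], str] = {
    ("HH", "AH", "L", "OW"): "hello",
    ("W", "ER", "L", "D"): "world",
}

SILENCE = "_"  # hypothetical label for windows with no attempted speech


def collapse_frames(frames: list[str]) -> list[list[str]]:
    """Merge consecutive repeated labels and split into words at silence frames."""
    words: list[list[str]] = []
    current: list[str] = []
    previous = None
    for label in frames:
        if label == SILENCE:
            if current:  # a silence ends the word in progress
                words.append(current)
                current = []
        elif label != previous:  # skip frames that repeat the last label
            current.append(label)
        previous = label
    if current:
        words.append(current)
    return words


def decode(frames: list[str]) -> list[str]:
    """Look up each collapsed phoneme sequence; unknown sequences become '<unk>'."""
    return [LEXICON.get(tuple(phonemes), "<unk>") for phonemes in collapse_frames(frames)]


frames = ["HH", "HH", "AH", "L", "L", "OW", "_", "_", "W", "ER", "ER", "L", "D"]
print(decode(frames))  # ['hello', 'world']
```

Because consecutive identical labels are merged, a word that genuinely repeats a phoneme back to back would need a separator token between the two, which is one reason practical decoders use a dedicated blank symbol.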

Transforming Lives With BCIs

The implications of these studies are profound. Devices like these could restore speech to people who have lost it to ALS, brainstem stroke, or injury. With further gains in decoding accuracy and speed, users may be able to hold regular jobs, maintain relationships, and lead fuller lives.

A Personal Mission

Erin Kunz, co-lead author of the Stanford study, has a personal connection to this work: her father's battle with ALS cost him his ability to speak. She emphasizes how much this technology could improve quality of life and ease everyday communication for nonverbal individuals.